How to Generate Unique IDs in Distributed Systems: 6 Key Strategies

In a distributed environment, two nodes can simultaneously assign IDs, the challenge is ensuring these IDs remain unique, avoiding overlaps and ensuring system consistency.

Imagine you have two nodes operating concurrently in a distributed system and both are responsible for generating IDs for objects stored in a shared storage.

How do you ensure that these nodes produce unique IDs that won’t collide?

Various strategies cater to these requirements, with each being apt to these requirements:

Uniqueness: Every ID generated should be unique across all nodes in the system.

Scalable: The system should be able to generate IDs at a high rate without collisions.

Monotonically Increasing: (If needed) The IDs should increase over time.

I’ve come across many ways to generate IDs and together, let’s take a closer look at a few of them:

UUID.

NanoID.

Sequence.

ObjectID.

Twitter Snowflake.

Sonyflake (inspired by Snowflake).

Each method has its own benefits and challenges, as we go through each one, I’ll share my thoughts.

1. UUID or GUID (128 bits)

When talking about generating unique IDs, UUIDs or Universal Unique Identifiers come to mind.

A UUID is made of 32 hexadecimal characters. Remember, each character is 4 bits. So, all in all, it’s 128 bits. And when you include the 4 hyphens, you’ll see 36 characters:

6e965784–98ef-4ebf-b477–8bd14164aaa4

5fd6c336-48c4-4510-bfe5-f7928a83a3e2

0333be18-5ecc-4d7e-98d4-80cc362e4adeThere are 5 common types of UUID:

Version 1 — Time-based MAC: This UUID uses the MAC Address of your computer and the current time.

Version 2 — DCE Security: Similar to Version 1 but with extra info like POSIX UID or GID.

Version 3 — Name-based MD5: This one takes a namespace and a string, then uses MD5 to create the UUID.

Version 4 — Randomness: Every character is chosen randomly.

Version 5 — Name-based SHA1: Think of Version 3, but instead of MD5, it uses SHA-1.

…You may want to consider other drafts like Version 6 - Reordered Time and Version 7 - Unix Epoch Time, etc, among the latest proposals at ramsey/uuid.

I won’t go into the details of each version right now. But if you’re unsure about which to choose, I’ve found Version 4 — Randomness to be a good starting point. It’s straightforward and effective.

“Random and unique? How’s that even possible?”

The magic lies in its super low chance of collision.

Pulling from Wikipedia, imagine generating 1 billion UUIDs every second for 86 whole years and only then would you have a 50% chance of getting a single match.

“Why do some say UUID has only 122 bits when it’s clearly 128 bits?”

When people talk about UUIDs, they often refer to the most common type, which is variant 1, version 4.

In this type, 6 out of the 128 bits are already set for specific purposes: 4 bits tell us it’s version 4 (or “v4”), and 2 bits are reserved for variant information.

So, only 122 bits are left to be filled in randomly.

Pros

It’s simple, there’s no need for initial setups or a centralized system to manage the ID.

Every service in your distributed system can roll out its own unique ID, no chit-chat needed.

Cons

With 128 bits, it’s a long ID and it’s not something you’d easily write down or remember.

It doesn’t reveal much information about itself.

UUIDs aren’t sortable (except for versions 1 and 2).

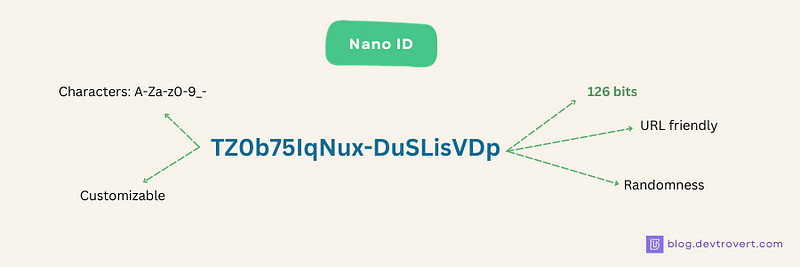

2. NanoID (126 bits)

Drawing from the concept of UUID, NanoID streamlines things a bit with just 21 characters but these characters are sourced from an alphabet of 64 characters, inclusive of hyphens and underscores.

Doing the math, each NanoID character takes up 6 bits, as opposed to the 4 bits of UUID and a quick multiplication, and we see NanoID coming in at a neat 126 bits.

NUp3FRBx-27u1kf1rmOxn

XytMg-01fzdSaHoKXnPMJ

_4hP-0rh8pNbx6-Qw1pMl“Does storing NanoID vs. UUID in a database make much of a difference?”

Well, if you’re saving them as strings, NanoID might be a bit more efficient, being 15 characters shorter than UUID, but in their binary forms, the difference is a mere 2 bits, often a minor detail in most storage scenarios.

Pros

NanoID uses characters (A-Za-z0–9_-) which is friendly with URLs.

At just 21 characters, it’s more compact than UUID, shaving off 15 characters to be precise (though it’s 126 bits versus UUID’s 128)

Cons

NanoID is newer and might not be as widely supported as UUID.

3. Sequences

Sequence or auto-increment might come to mind, as it’s the method that databases like PostgreSQL and MySQL commonly use

At its core, there’s a centralized counter ticking upwards, but picture a scenario with millions of simultaneous requests. This central point then turns into both a bottleneck and a potential single point of failure.

"So, what? We can't distribute the load or something?"

Absolutely. Instead of one centralized generator, each node can have its very own ID generator, incrementing as it goes:

Node A: 10 20 30 40

Node B: 1 11 21 31 41

Node C: 2 12 22 32 42

// Alternatively

Node A: a_1 a_2 a_3 a_4

Node B: b_1 b_2 b_3 b_4But, sorting becomes a bit tricky.

While the system is distributed, each node can still be a bottleneck, if a node is overwhelmed with countless requests, it will process them one after the other.

Pros

It’s a straightforward approach with the added bonus of sortable IDs, making it apt for small to medium-sized systems.

Cons

Doesn’t perform well under sudden, high-volume request spikes.

Removing nodes in a decentralized system can complicate matters

IDs from a decentralized model don’t follow a global sequence, complicating any sorting efforts.

4. ObjectID (96 bits)

ObjectID is MongoDB’s answer to a unique document ID, this 12-byte identifier typically resides in the “_id” field of a document, and if you’re not setting it yourself, MongoDB steps in to do it for you.

Here’s what makes up an ObjectID:

Timestamp (4 bytes): This represents the time the object was created, measured from the Unix epoch (a timestamp from 1970, for those who might need a refresher).

Random Value (5 bytes): Each machine or process gets its own random value.

Counter (3 bytes): A simple incrementing counter for a given machine

“But how does each process ensure its random value is unique?”

With 5 bytes, we’re talking about 2⁴⁰ potential values, given the limited number of machines or processes, collisions are exceedingly rare

When representing ObjectIDs, MongoDB goes with hexadecimal, turning those 12 bytes (or 96 bits) into 24 characters

6502b4ab cf09f864b0 074858

6502b4ab cf09f864b0 074859

6502b4ab cf09f864b0 07485aFor those acquainted with Go, here’s a peek at its implementation:

var objectIDCounter = readRandomUint32()

var processUnique = processUniqueBytes()

func NewObjectIDFromTimestamp(timestamp time.Time) ObjectID {

var b [12]byte

binary.BigEndian.PutUint32(b[0:4], uint32(timestamp.Unix()))

copy(b[4:9], processUnique[:])

putUint24(b[9:12], atomic.AddUint32(&objectIDCounter, 1))

return b

}Pros

Ensures a global order without needing a centralized authority to oversee uniqueness

In terms of byte size, it’s more compact than both UUID and NanoID.

Using IDs for sorting is straightforward, and you can easily see when each object was made.

Reveals the specific process or machine that created an item.

Scales gracefully, thanks to its time-based structure ensuring no future conflicts.

Cons

Despite its relative compactness, 96 bits can still be considered long.

Be careful when sharing ObjectIDs with clients, they might reveal too much.

5. Twitter Snowflake (64 bits)

Commonly known as “Snowflake ID”, this system was developed by Twitter to efficiently generate IDs for their massive user base.

Also, a Snowflake ID boils down to a 64-bit integer, which is more compact than MongoDB’s ObjectID

Sign Bit (1 bit): This bit is typically unused, though it can be reserved for specific functions.

Timestamp (41 bits): Much like ObjectID, it represents data creation time in milliseconds, spanning ~70 years from its starting point.

Datacenter ID (5 bits): Identifies the physical datacenter location. With 5 bits, we can have up to 2⁵ = 32 datacenter.

Machine/ Process ID (5 bits): Tied to individual machines, services, or processes creating data.

Sequence (12 bits): An incrementing counter that resets to 0 every millisecond.

“Hold on. 70 years? So from 1970, it’s done by 2040?”

Exactly.

Many Snowflake implementations use a custom epoch that begins more recently, like Nov 04 2010 01:42:54 UTC, for instance. As for its advantages, they’re pretty evident given the design.

You can take a look at how it’s implemented in Go with bwmarrin/snowflake:

func (n *Node) Generate() ID {

n.mu.Lock()

now := time.Since(n.epoch).Nanoseconds() / 1000000

if now == n.time {

n.step = (n.step + 1) & n.stepMask

if n.step == 0 {

for now <= n.time {

now = time.Since(n.epoch).Nanoseconds() / 1000000

}

}

} else {

n.step = 0

}

n.time = now

r := ID((now)<<n.timeShift |

(n.node << n.nodeShift) |

(n.step),

)

n.mu.Unlock()

return r

}Cons

Might be over-engineered for medium-sized businesses, especially with complex setups like multiple data centers, millisecond-level timestamps, sequence resets...

Limited lifespan, it has a lifespan of ~70 years.

It packs features some may find excessive, but for giants like Twitter, it’s right on the money.

6. Sonyflake (64 bits)

Inspired by Snowflake, Sonyflake makes a few alterations in the distribution of its bits:

Sign bit (1 bit)

Timestamp (39 bits): Sonyflake operates at 10 milliseconds, expands the duration coverage from ~70 years (like in Snowflake) to ~174 years

Machine/ Process ID (16 bits)

Sequence (8 bits): This permits 256 IDs every 10 ms, this is somewhat slower than Snowflake, it increases the chance of ID overlap during peak time.

Given its specifications, Sonyflake seems suitable more for small to medium-sized systems where extreme speed and scale aren’t important.

Reflecting on these methods, there’s flexibility here and you’re not confined to a one-size-fits-all solution.

Depending on your unique challenges and goals, there’s room to adapt these systems or even design a custom ID generator that aligns perfectly with your business needs.