What Is Consistent Hashing? The Backbone of Distributed Storage Sharding

Traditional hashing can cause data imbalance when scaling, learn how consistent hashing ensures balanced distribution across shards, even with node changes.

Before diving in, let’s go through a simple example to show the problems with traditional hashing.

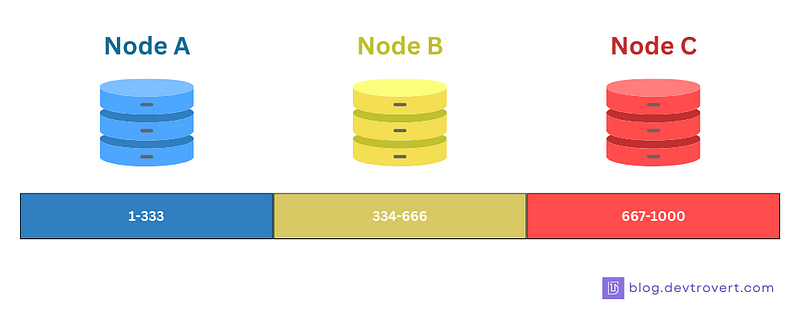

Tradition Hashing Example

In this instance, we have 3 nodes, and we’ll employ the mod operation for hashing (h = key mod 3) to distribute keys across these nodes:

Data items 1–333 land on Node A

Data items 334–666 find their place in Node B

Data items 667–1000 are allocated to Node C

So now, let’s introduce a new node into the mix, Node D.

Similar to other nodes, but we switch to mod 4, adjusting the distribution as follows: node A (1–250), node B (251–500), node C (501–750), and finally, node D (751–1000).

“What’s the snag here?”

You’ll see almost everything has moved around, meaning a lot of the 1000 data items now need to be shifted to make room for Node D.

This example only shows a small part of the problem, imagine the huge mess if we had 1,000,000 data items. So, it’s obvious we need a better hashing method to cut down on all this moving around.

Why Traditional Hashing Is Bad

I’ve outlined the main issue in distributed systems, particularly when scaling in or out, and the need for moving or reorganizing data.

Each time you add or remove a node in a standard system, a large part of your data needs to be moved around and this results in more network and I/O operations, increased delay, and possible system downtimes.

Take the example of a distributed cache, the issue becomes more serious. If a cache constantly needs to move its data around, cached items become unavailable and leading to cache misses. This undermines the goal of a cache, which is to have data ready and accessible quickly.

1. What is Consistent Hashing

When it comes to sharding and distributed systems, consistent hashing is a technique (or algorithm) used to ensure that when nodes are added or removed, only a minimal amount of data gets shuffled around.

How it works?

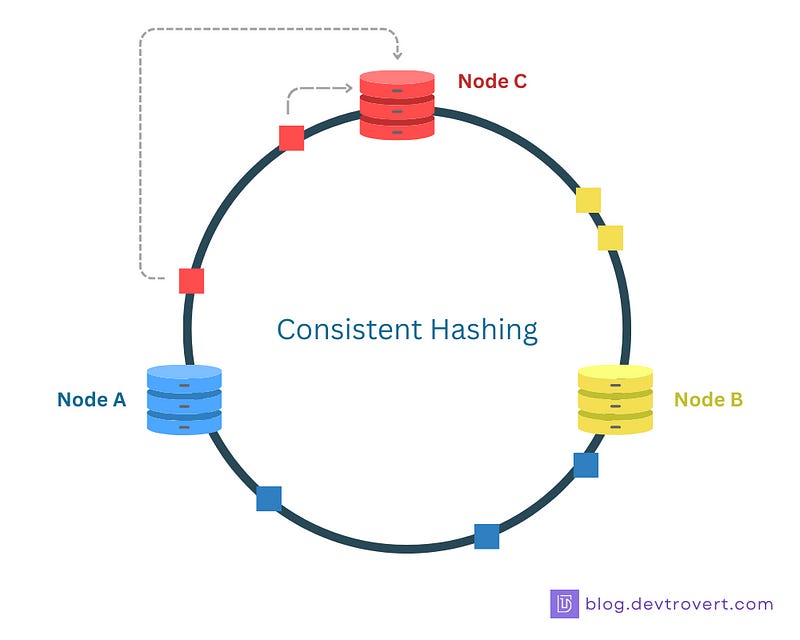

Instead of a straight line distribution, we place nodes and data on a circle (or ring), using a hash function to map both nodes and data onto this ring.

Data is assigned to a node by moving clockwise around the ring until it encounters the first node:

This method ensures a more balanced distribution of data even when nodes are added or removed, with only a small portion of the data (situated between two nodes on this ring) being reassigned.

Example for Consistent Hashing

Imagine a circle, where we place 3 nodes A, B and C based on their hash values. If a data item’s hash value falls between node A and node B on the circle, it gets stored on node B (we move clockwise right?).

Now, if we add another node D between A and B, only the data located between A and D will be reassigned to node D, while the data between B and C or C and A remains where it is.

Basically, the circle represents the entire possible output range of our hash function, starting from the minimum value (like 0) to the maximum value (like a 32-bit value).

And in most situations, hash functions give a fixed-size output and both nodes (servers) and data items (keys) are mapped onto this circle based on their hash value.

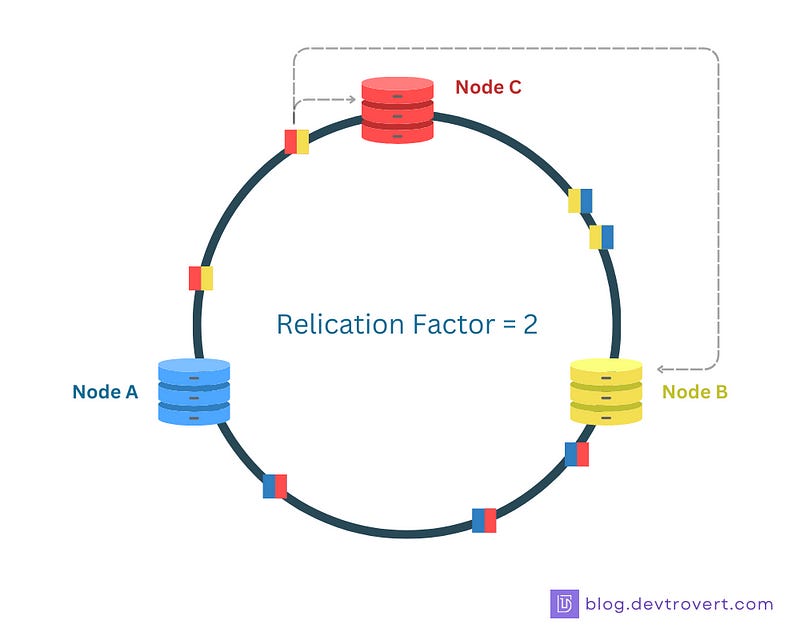

Data Replication

There’s more to consistent hashing, to avoid any single point of failure, data is copied onto multiple nodes. So, if the replication factor is 3, then 3 nodes in the ring will have the same copy of a data item.

The strategy for replication depends on the specific setup, in our example below, the data will be replicated clockwise until we reach n nodes, (replication factor = 2).

This means, even if one node stops working, the data is still available for our clients and this way of having backup helps keep our system available.

Now that you have the basics of what consistent hashing is.

“Any cases where consistent hashing does not distribute data evenly?”

It might occur.

Without virtual nodes, the even distribution of data relies mostly on your hash function and node changes. If you take a look at node D above, you’ll notice it’s not evenly distributed, so now, let’s delve into what virtual nodes are.

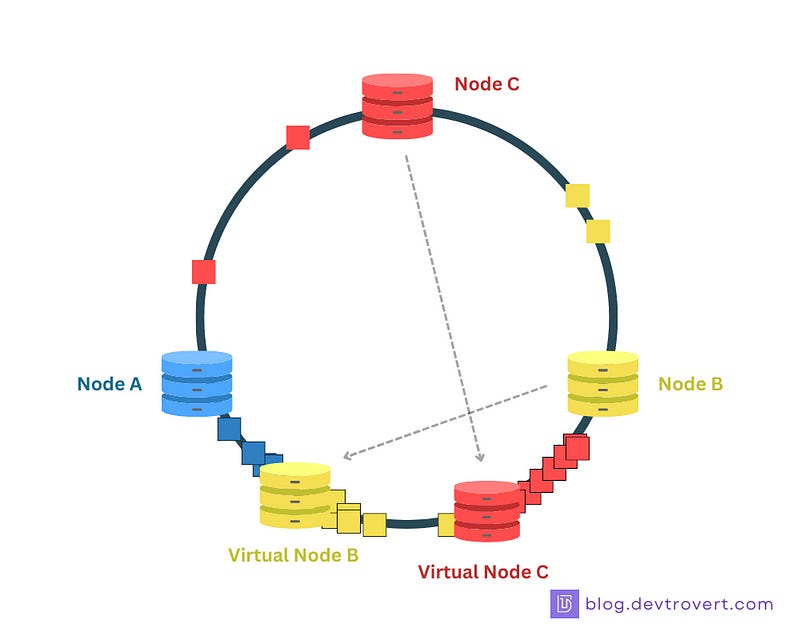

2. Virtual Nodes In Consistent Hashing

Virtual nodes (often referred to as “vnodes”) are not actual physical nodes or servers, but rather representations of the real nodes within the hash ring, with each physical node being associated with multiple virtual nodes on the ring.

The problem

Let’s say we are using consistent hashing with real nodes, and somehow, our data isn’t spread out evenly around the ring,… so what’s the issue here?

Well, a certain node might end up handling way more requests than other nodes, placing it under more stress and if that node goes down, a large number of keys will need to be moved around.

This way, we lose the main advantage of consistent hashing.

Virtual Nodes as a Solution

Virtual nodes address this problem, we will map each physical node to multiple points on the ring, so the more virtual nodes each node has, the more balanced the hash ring is.

Benefit of virtual nodes:

More balanced hash ring: Even if a few virtual nodes aren’t spread out evenly, the hash ring stays balanced.

Graceful handling of node failure/addition: When a node goes down or a new one is added, only a few virtual nodes are moved around, so the effect is small.

Flexible: You can adjust the number of virtual nodes for each node, or even turn off virtual nodes.

“So, if virtual nodes are so beneficial, why not just add more and more of them?”

Actually, there are some downsides: computational overhead and memory overhead.

The more virtual nodes we add, the more calculations are needed when looking up a key. Also having more virtual nodes increases memory overhead, since we need to store information and managing many virtual nodes requires more memory

Consistent hashing finds its application in numerous distributed systems like caches, databases, file systems…

For instance, in Memcache, consistent hashing helps ensure that only a small number of keys are reassigned to different servers, minimizing cache misses and in Cassandra, data is spread out across many servers efficiently.

“Is consistent hashing applicable to load balancing?”

I’ve heard from many: “Consistent hashing is a technique for distributing data across multiple nodes, not for load balancing”.

True, but there’s a nuance.

A load balancer can indeed employ consistent hashing to decide which server should process a given request.

By hashing certain aspects of the incoming request (like the IP address or the session ID), the load balancer can route specific users or sessions to the same server consistently, as long as the server pool remains unchanged.

When a new server enters or exits the pool, consistent hashing ensures that only a small number of sessions are impacted.

So, it’s a viable idea, isn’t it?